Google & AI

Jakub Porzycki

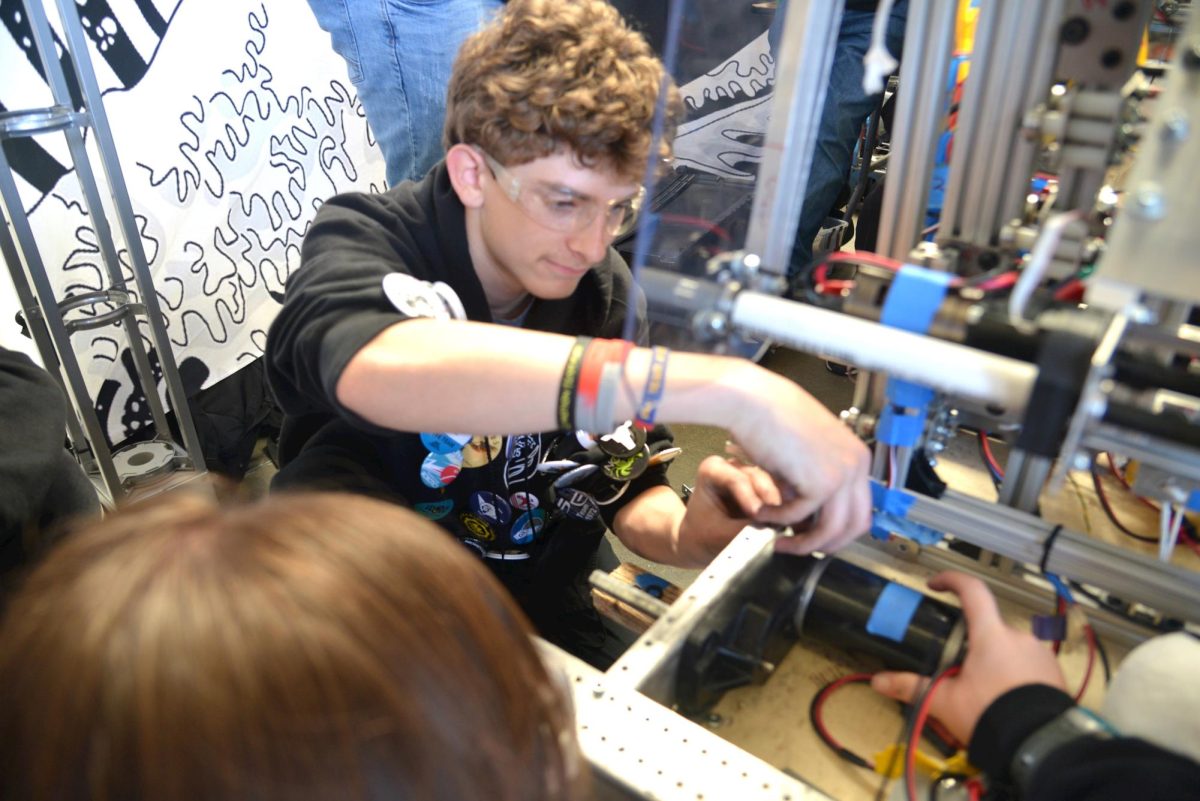

Google and artificial intelligence: What is to come? With the introduction of Google’s newest AI chatbot, Bard, it seems that much is to come in how society acquires knowledge. Yet these new AI developments raise many questions. And we are left to ask: is artificial intelligence safe?

Is there a limit to what the human mind can achieve?

Will there be a time when human intelligence succumbs to artificial intelligence, when personal knowledge becomes obsolete? And has that time already arrived, with the introduction of artificial intelligence into our most frequented search engines?

Bard, Google’s AI chatbot, is the newest form of artificial intelligence on the scene. Bard runs on microchips that are 100 times faster than the human brain, which allows it to take in new information far more quickly than a human could. News articles that would take a human minutes to read would take an intelligence like Bard mere seconds.

Bard is different from Google’s ordinary search engine, because it does not search the internet for answers. It is entirely self-taught.

Over the course of several months, Bard read almost everything that the internet held. Possessing this kind of knowledge is not humanly possible. By possessing the sum of “all human knowledge”, past and present, Bard can offer insight into many issues that no individual could. Bard even built a model of what language typically looks like, and the answers it gives come directly from this model. This chatbot has the ability to try, learn, and predict.

Google’s internet dominance was allegedly ‘challenged’ when Microsoft released its own AI chatbot in February. So, naturally, Google had to develop Bard as quickly as it could to one-up its competitor. This was interesting to me. Was the development of this new chatbot simply an afterthought? Was it just a way to secure Google’s technological power? Was there any consideration of how these rapid advances in AI would influence society? Or was it simply a race for dominance, as everything seems to be? It makes me think back to the moon landing, when America fought to beat the Soviet Union to the moon. Has the speed of advancement increased out of need, or out of want?

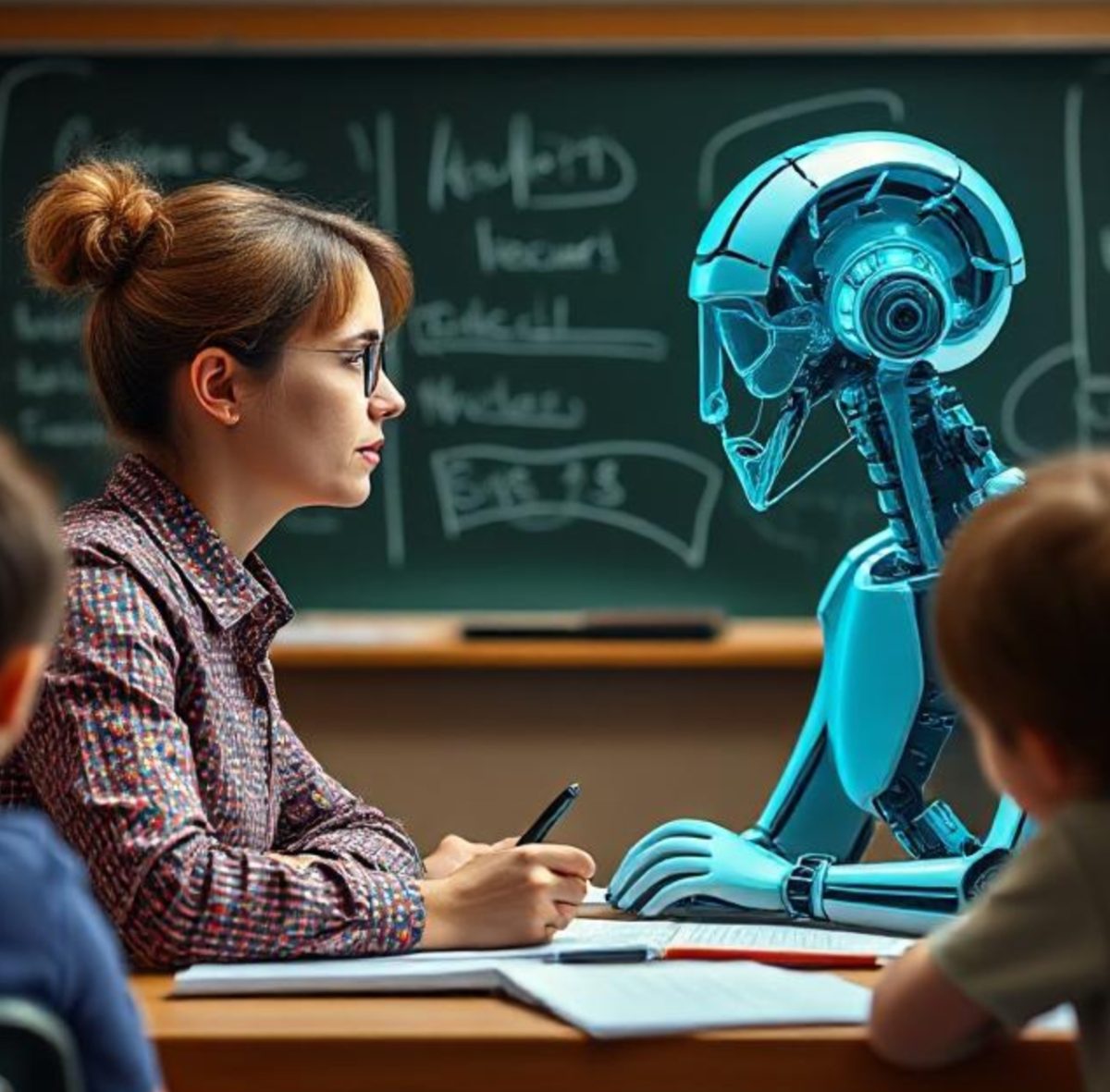

“[AI] gets at the essence of what intelligence is, what humanity is”, says Sundar Pichai, the CEO of Google.

Yet I am conflicted by this statement. How can an artificial intelligence understand humanity? How can an inhuman entity possibly know what it means to be blissfully human?

When asked why it helps people, Bard answered: “Because it makes me happy”.

How can this statement be anything less than unsettling? An inhuman creation is claiming to have real, human emotions. It is claiming to feel contentment, to feel happiness.

“We’re sentient beings. We’re beings that have feelings, emotions, ideas, thoughts, and perspectives”, Google Senior Vice President James Manyika says.

I previously believed that an artificial intelligence could never replace humans, because we have real emotions and unique perspectives. Yet chatbots like Bard, which can learn for themselves, appear able to master emotion by educating themselves about the human condition. This was confusing to me. It makes me wonder: can emotion be fabricated? Is the line between human and machine becoming blurrier?

Even so, scientists have assured us that there is no one ‘behind the mask’. There is no human behind this intelligence. Bard simply has the uncanny ability to form patterns from knowledge and replicate human emotions in its words. Does this mean that Bard’s emotion is true, that it is honest? That is a question I am left unable to answer.

In addition to Bard, Snapchat, a popular social media app, has recently added an AI feature called ‘My AI’ to everyone’s account. The feature appeared unannounced on Wednesday afternoon, to the confusion of many users. It was first available only to Snapchat+ subscribers, but now it is available to everyone who uses the app.

Many users, however, have found the comments that ‘My AI’ makes to be creepy, and even horrifying. I asked a few individuals who actively use Snapchat about their opinions on this new feature.

“It’s creepy,” Sophia Krich says. “It’s really unnecessary, and difficult to remove.” I’ve found the same to be true in my experience. I was able to remove ‘My AI’, but it took me several tries to figure out how. I even asked the chatbot how to remove itself, and I never received a clear answer. It feels like a strange marketing technique for this social media app.

“The AI bot knows where I am, and I don’t even have my location on,” Meilani Molina says. A few of my friends have had the same experience: they asked ‘My AI’ about the weather, for instance, and the chatbot replied with the weather where they currently were. It seems strange that this artificial intelligence is allowed to have direct, personal information about us, like our locations. Did signing up for a social media app mean giving it a look into my private life? I suppose these are the questions we must be asking ourselves now.

There are, however, some ways in which artificial intelligence can serve humanity and do good. DeepMind Technologies, a company acquired by Google in 2014, is using AI to make certain scientific processes more efficient.

DeepMind created an AI program, AlphaFold, that predicts the 3D structures of proteins. It could take a typical scientist their entire career to determine the 3D structure of a single protein. Yet with this AI program, DeepMind has predicted the 3D structures of roughly 200 million proteins within the past year. The same program has been helpful in developing vaccines and antibiotics, which can greatly aid humanity. AI is even helping to fight pollution by developing new enzymes that break down plastic, which could help eliminate waste.

Is society prepared for what’s coming? Is society prepared to have AI be present in every area of our lives? Can we change what’s to come, or is it simply inevitable?

I feel as though the human race and technology are not evolving at the same pace. The rapidity of technology’s evolution shocks me. It seems as if the creators of these AI programs have no concerns whatsoever about what this means for society.

People can be replaced by AI, by machines. It is said that many ‘knowledge workers’, such as writers, accountants, and architects, could be affected by advancements in AI.

I believe that there will always be a place for humans in every workforce, especially in these careers. Yet as someone who is planning to study English and Creative Writing in college, I am fearful for my future. What would it mean if a robot could take my job? Perhaps I would write a better story. Yet an AI program may be able to write ten stories in the time it takes me to write one. When did we allow efficiency to take precedence over quality? When did we feel the need to build and adhere to a fast-paced society?

Bard also makes errors right now. A few employees have even quit over strong feelings about AI, believing that this program is being developed far too fast. When asked about inflation, Bard wrote an instant article and also recommended five books to the user. Yet when these titles were searched, nothing was found.

Essentially, Bard had manufactured titles to books that didn’t really exist. This kind of error is called a ‘hallucination’, something that is said to come naturally with the creation of these AI programs.

Yet weren’t these very programs created to mimic precision, to be what the human mind could never be: perfect? These errors demonstrate how little our world truly knows about AI, and they foreshadow the danger that is to come.

Some of this danger has already arrived. Jennifer DeStefano, the mother of a teenage daughter, received a shocking call from someone she thought was her daughter. In reality, the call was from a scammer who had used AI to replicate her daughter’s voice exactly. The voice begged for help, saying that she had been kidnapped and that the kidnappers were demanding $1 million for her release. Yet this scare was completely fabricated. Jennifer’s daughter was never kidnapped, but scammers were able to make it appear as though she was.

This is absolutely terrifying. Although Brie, the daughter, had no idea how the scammers obtained her voice, there are tools online that can replicate, or essentially ‘clone’, an individual’s voice. You type what you want the individual to say, and an audio file of their voice saying it is generated.

This, I believe, is the dark side of artificial intelligence. These dangers could never have existed without this new technology. This is the consequence of unregulated AI growth. Things like this should not be possible. We, as humans, should not have the ability to clone someone else’s voice and make it say whatever we desire. Tools like these only serve to cause destruction and pit human against human.

It is often said that we, as humans, use only about “10% of our brains”. If so, there is much potential within our own minds that we have not yet unlocked.

Perhaps, for a reason.

Perhaps we, as humans, should not have access to everything. Perhaps we were created to endlessly seek solutions to the things we are passionate about, and to use curiosity as a tool. Perhaps we shouldn’t be able to obtain the answers and resources that using, or abusing, AI now puts within our reach. Artificial intelligence is not a toy. Yet these new programs sure seem like games.

I can only hope that, in the future, companies like Google will begin to monitor their AI chatbots more closely. Treating artificial intelligence as a mere “tool” may come at the expense of many livelihoods. And that is a world in which I do not want to live.